
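Introduction to SURF

SURF (Speeded Up Robust Features) is a local feature detector and descriptor used in computer vision. It was first presented by Herbert Bay et al. in 2006 as a faster alternative to SIFT (Scale-Invariant Feature Transform). SURF substantially reduces computation time while keeping matching performance comparable.

The main goal of SURF is to detect keypoints and match them across different images. Keypoints are distinctive, stable image features that remain detectable when the image is rotated, scaled, or subjected to lighting changes.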

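Features of SURF

- Speed: SURF is considerably faster than SIFT, mainly thanks to its use of integral images and box filters.

- Scale and rotation invariance: SURF can detect and describe feature points at different scales and orientations.

- Robustness to lighting and viewpoint changes: it copes well with illumination changes and small changes in viewpoint.

- Feature descriptor: SURF produces 64- or 128-dimensional descriptors that are used in the subsequent matching step.

- Based on Haar wavelets: descriptors are built from Haar wavelet responses, which are cheap to compute.

- Multi-scale analysis: features are detected across a scale space, which SURF builds by enlarging the box filters rather than repeatedly downsampling the image.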
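The speed advantage listed above comes largely from the integral image (summed-area table), which lets the sum over any rectangular box be read with four lookups regardless of box size. The following is a minimal sketch of that idea in plain C#, not the OpenCV implementation; the class and method names (`IntegralImageSketch`, `Build`, `BoxSum`) are hypothetical and chosen only for illustration.

```csharp
using System;

static class IntegralImageSketch
{
    // Build a summed-area table with one extra row and column of zeros,
    // so table[y, x] holds the sum of all pixels above and to the left of (x, y).
    public static long[,] Build(byte[,] image)
    {
        int rows = image.GetLength(0), cols = image.GetLength(1);
        var table = new long[rows + 1, cols + 1];
        for (int y = 0; y < rows; y++)
            for (int x = 0; x < cols; x++)
                table[y + 1, x + 1] = image[y, x]
                                    + table[y, x + 1]   // sum above
                                    + table[y + 1, x]   // sum to the left
                                    - table[y, x];      // overlap counted twice
        return table;
    }

    // Sum of the box whose top-left pixel is (x, y): four lookups, any box size.
    public static long BoxSum(long[,] table, int x, int y, int width, int height)
    {
        return table[y + height, x + width]
             - table[y, x + width]
             - table[y + height, x]
             + table[y, x];
    }

    static void Main()
    {
        var image = new byte[,]
        {
            { 1, 2, 3 },
            { 4, 5, 6 },
            { 7, 8, 9 },
        };
        long[,] table = Build(image);
        Console.WriteLine(BoxSum(table, 0, 0, 3, 3)); // whole image: 45
        Console.WriteLine(BoxSum(table, 1, 1, 2, 2)); // 5 + 6 + 8 + 9 = 28
    }
}
```

Because the cost of `BoxSum` does not depend on the box dimensions, larger filters at coarser scales cost the same as small ones.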

Using SURF in OpenCvSharp

Note that, for patent reasons, SURF was moved to the opencv_contrib module in OpenCV 3.0 and later. To use SURF, you therefore need to make sure that your OpenCvSharp build includes this extra module.

The basic steps for using SURF in OpenCvSharp are as follows.

Import the required namespaces:

C#

using OpenCvSharp;
using OpenCvSharp.XFeatures2D;

Create the SURF object:

C#

var surf = SURF.Create(hessianThreshold: 100);

Detect keypoints:

C#

using var image = new Mat("path/to/your/image.jpg", ImreadModes.Grayscale);
KeyPoint[] keypoints = surf.Detect(image);

Compute descriptors:

C#

Mat descriptors = new Mat();
surf.Compute(image, ref keypoints, descriptors);

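SURF application examples

Feature detection and description

The following complete example shows how to use SURF to detect feature points in an image and compute their descriptors: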

C#

using System;
using System.Drawing;
using System.Windows.Forms;
using OpenCvSharp;
using OpenCvSharp.Extensions;
using OpenCvSharp.XFeatures2D;

public partial class Form4 : Form
{
    private Mat originalImage;

    public Form4()
    {
        InitializeComponent();
    }

    private void Form4_Load(object sender, EventArgs e)
    {
    }

    private void btnLoadImage_Click(object sender, EventArgs e)
    {
        using (OpenFileDialog ofd = new OpenFileDialog())
        {
            ofd.Filter = "Image Files|*.jpg;*.jpeg;*.png;*.bmp";
            if (ofd.ShowDialog() == DialogResult.OK)
            {
                // Release the previously loaded image before replacing it
                originalImage?.Dispose();
                originalImage = new Mat(ofd.FileName, ImreadModes.Color);
                DisplayImage(originalImage);
                btnDetectFeatures.Enabled = true;
            }
        }
    }

    private void btnDetectFeatures_Click(object sender, EventArgs e)
    {
        if (originalImage == null) return;

        try
        {
            using (var grayImage = new Mat())
            using (var surf = SURF.Create(hessianThreshold: 100))
            using (var descriptors = new Mat())
            using (var outputImage = new Mat())
            {
                // Convert to grayscale
                Cv2.CvtColor(originalImage, grayImage, ColorConversionCodes.BGR2GRAY);

                // Detect keypoints
                KeyPoint[] keypoints = surf.Detect(grayImage);

                // Compute descriptors
                surf.Compute(grayImage, ref keypoints, descriptors);

                // Draw the keypoints; DrawKeypoints writes the annotated image into outputImage
                Cv2.DrawKeypoints(grayImage, keypoints, outputImage, new Scalar(0, 255, 0), DrawMatchesFlags.DrawRichKeypoints);
                DisplayImage(outputImage);

                MessageBox.Show($"Detected {keypoints.Length} keypoints\nDescriptor dimension: {descriptors.Cols}",
                    "Detection Results", MessageBoxButtons.OK, MessageBoxIcon.Information);
            }
        }
        catch (Exception ex)
        {
            MessageBox.Show($"Error: {ex.Message}", "Error", MessageBoxButtons.OK, MessageBoxIcon.Error);
        }
    }

    private void DisplayImage(Mat image)
    {
        try
        {
            using (var bitmap = BitmapConverter.ToBitmap(image))
            {
                // Dispose the old bitmap so repeated loads do not leak GDI handles
                if (pic.Image != null)
                {
                    pic.Image.Dispose();
                }
                pic.Image = new Bitmap(bitmap);
            }
        }
        catch (Exception ex)
        {
            MessageBox.Show($"Error displaying image: {ex.Message}");
        }
    }

    protected override void OnFormClosing(FormClosingEventArgs e)
    {
        base.OnFormClosing(e);
        originalImage?.Dispose();
    }
}

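This example loads a color image and converts it to grayscale, then uses SURF to detect keypoints and compute their descriptors. Finally, it draws the keypoints, displays the result in the form, and reports the number of keypoints and the descriptor dimension.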

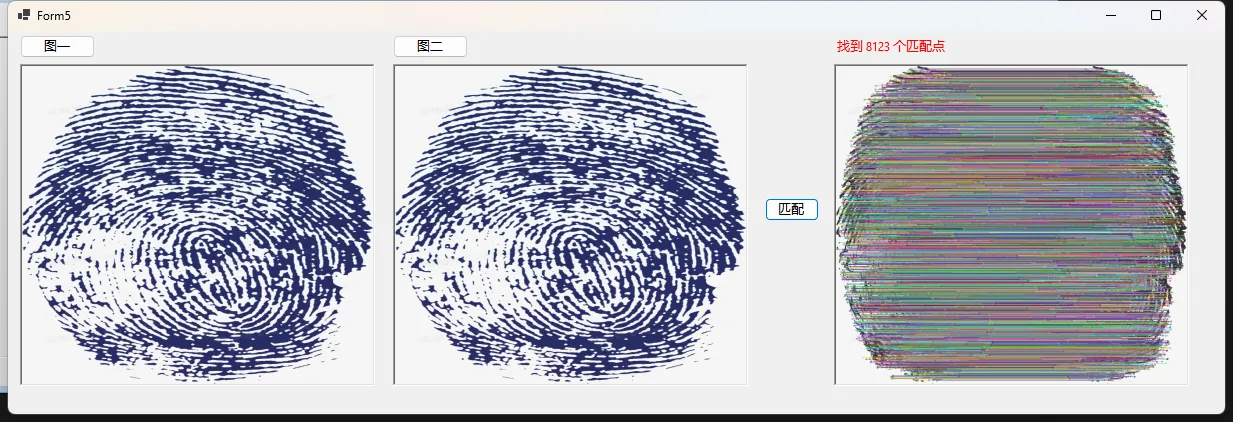

Image matching

SURF is commonly used for image matching. The following example matches two images with SURF:

C#

using System;
using System.Windows.Forms;
using OpenCvSharp;
using OpenCvSharp.XFeatures2D;

public partial class Form5 : Form
{
    private string imagePath1;
    private string imagePath2;

    public Form5()
    {
        InitializeComponent();
    }

    // Select the first image
    private void btnSelectImage1_Click(object sender, EventArgs e)
    {
        using (OpenFileDialog ofd = new OpenFileDialog())
        {
            ofd.Filter = "Image files|*.jpg;*.png;*.bmp";
            if (ofd.ShowDialog() == DialogResult.OK)
            {
                imagePath1 = ofd.FileName;
                pic1.ImageLocation = imagePath1;
            }
        }
    }

    // Select the second image
    private void btnSelectImage2_Click(object sender, EventArgs e)
    {
        using (OpenFileDialog ofd = new OpenFileDialog())
        {
            ofd.Filter = "Image files|*.jpg;*.png;*.bmp";
            if (ofd.ShowDialog() == DialogResult.OK)
            {
                imagePath2 = ofd.FileName;
                pic2.ImageLocation = imagePath2;
            }
        }
    }

    private void btnMatch_Click(object sender, EventArgs e)
    {
        if (string.IsNullOrEmpty(imagePath1) || string.IsNullOrEmpty(imagePath2))
        {
            MessageBox.Show("Please select two images first!");
            return;
        }

        try
        {
            // Load both images as grayscale
            using (var img1 = new Mat(imagePath1, ImreadModes.Grayscale))
            using (var img2 = new Mat(imagePath2, ImreadModes.Grayscale))
            // Create the SURF detector, descriptor buffers, and matcher
            using (var surf = SURF.Create(hessianThreshold: 100))
            using (var descriptors1 = new Mat())
            using (var descriptors2 = new Mat())
            using (var matcher = new BFMatcher())
            using (var imgMatches = new Mat())
            {
                // Detect keypoints and compute descriptors in one pass
                surf.DetectAndCompute(img1, null, out KeyPoint[] keypoints1, descriptors1);
                surf.DetectAndCompute(img2, null, out KeyPoint[] keypoints2, descriptors2);

                // Find the nearest descriptor in image 2 for each descriptor in image 1
                DMatch[] matches = matcher.Match(descriptors1, descriptors2);

                // Draw the matches
                Cv2.DrawMatches(img1, keypoints1, img2, keypoints2, matches, imgMatches);

                // Save and display the result
                string resultPath = "matches_result.jpg";
                imgMatches.SaveImage(resultPath);
                pictureBoxResult.ImageLocation = resultPath;

                // Show the number of matches
                lblResult.Text = $"Found {matches.Length} matches";
            }
        }
        catch (Exception ex)
        {
            MessageBox.Show($"Error during processing: {ex.Message}");
        }
    }
}

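This example loads two images, uses SURF to detect and describe their feature points, and then uses a brute-force matcher (BFMatcher) to find the matching points between the two images.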
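Conceptually, a brute-force matcher compares every query descriptor against every train descriptor and keeps the closest one. The sketch below illustrates this with plain float arrays and Euclidean (L2) distance; it is an assumption-based illustration of the idea rather than OpenCV's actual implementation, and the names `BruteForceMatchSketch`, `Distance`, and `Match` are hypothetical.

```csharp
using System;

static class BruteForceMatchSketch
{
    // Euclidean (L2) distance between two descriptors of equal length.
    public static double Distance(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.Sqrt(sum);
    }

    // For each query descriptor, return the index of the closest train descriptor
    // (or -1 when there are no train descriptors).
    public static int[] Match(float[][] query, float[][] train)
    {
        var best = new int[query.Length];
        for (int q = 0; q < query.Length; q++)
        {
            double bestDistance = double.MaxValue;
            best[q] = -1;
            for (int t = 0; t < train.Length; t++)
            {
                double d = Distance(query[q], train[t]);
                if (d < bestDistance)
                {
                    bestDistance = d;
                    best[q] = t;
                }
            }
        }
        return best;
    }

    static void Main()
    {
        // Tiny 2-dimensional descriptors, purely for illustration
        var query = new[] { new float[] { 0f, 0f }, new float[] { 5f, 5f } };
        var train = new[] { new float[] { 4f, 6f }, new float[] { 1f, 0f } };
        int[] matches = Match(query, train);
        Console.WriteLine(string.Join(", ", matches)); // 1, 0
    }
}
```

The cost grows with the product of the two descriptor counts, which is why approximate matchers are often preferred for large descriptor sets.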

Object recognition

SURF can be used for simple object recognition tasks. The following is a basic example:

C#

using System;
using System.Collections.Generic;
using OpenCvSharp;
using OpenCvSharp.XFeatures2D;

class Program
{
    static void Main(string[] args)
    {
        using (var objectImage = new Mat("pcb_layout.png", ImreadModes.Grayscale))
        using (var sceneImage = new Mat("template.png", ImreadModes.Grayscale))
        using (var surf = SURF.Create(hessianThreshold: 100))
        using (var objectDescriptors = new Mat())
        using (var sceneDescriptors = new Mat())
        using (var matcher = new FlannBasedMatcher())
        {
            // Detect keypoints and compute descriptors for both images
            surf.DetectAndCompute(objectImage, null, out KeyPoint[] objectKeypoints, objectDescriptors);
            surf.DetectAndCompute(sceneImage, null, out KeyPoint[] sceneKeypoints, sceneDescriptors);

            // Find the two nearest neighbors of each object descriptor
            var matches = matcher.KnnMatch(objectDescriptors, sceneDescriptors, 2);

            // Ratio test: keep a match only if it is clearly better than the runner-up
            var goodMatches = new List<DMatch>();
            foreach (var m in matches)
            {
                if (m.Length >= 2 && m[0].Distance < 0.7 * m[1].Distance)
                {
                    goodMatches.Add(m[0]);
                }
            }

            // A homography needs at least four point correspondences
            if (goodMatches.Count >= 4)
            {
                var obj = new Point2f[goodMatches.Count];
                var scene = new Point2f[goodMatches.Count];
                for (int i = 0; i < goodMatches.Count; i++)
                {
                    obj[i] = objectKeypoints[goodMatches[i].QueryIdx].Pt;
                    scene[i] = sceneKeypoints[goodMatches[i].TrainIdx].Pt;
                }

                using (var homography = Cv2.FindHomography(InputArray.Create(obj), InputArray.Create(scene), HomographyMethods.Ransac))
                using (var result = sceneImage.CvtColor(ColorConversionCodes.GRAY2BGR))
                {
                    if (homography.Empty())
                    {
                        Console.WriteLine("Could not estimate a homography");
                        return;
                    }

                    // Project the corners of the object image into the scene
                    var objCorners = new[]
                    {
                        new Point2f(0, 0),
                        new Point2f(objectImage.Cols, 0),
                        new Point2f(objectImage.Cols, objectImage.Rows),
                        new Point2f(0, objectImage.Rows),
                    };
                    var sceneCorners = Cv2.PerspectiveTransform(objCorners, homography);

                    // Draw a green outline around the detected object
                    for (int i = 0; i < 4; i++)
                    {
                        Cv2.Line(result, (Point)sceneCorners[i], (Point)sceneCorners[(i + 1) % 4], new Scalar(0, 255, 0), 4);
                    }

                    // Add a text label
                    Cv2.PutText(result, "Detected Object", (Point)sceneCorners[0], HersheyFonts.HersheyComplex, 1, new Scalar(0, 255, 0), 2);

                    // Show the result window and wait for a key press
                    Cv2.ImShow("Detection Result", result);
                    Cv2.WaitKey(0);
                    Cv2.DestroyAllWindows();

                    // Save the result
                    result.SaveImage("object_detection_result.jpg");
                }
                Console.WriteLine("The object was detected and marked in the scene");
            }
            else
            {
                Console.WriteLine("Not enough good matches were found");

                // Show a message indicating that no object was found
                using (var result = sceneImage.CvtColor(ColorConversionCodes.GRAY2BGR))
                {
                    Cv2.PutText(result, "No Object Found", new Point(50, 50), HersheyFonts.HersheyComplex, 1, new Scalar(0, 0, 255), 2);
                    Cv2.ImShow("Detection Result", result);
                    Cv2.WaitKey(0);
                    Cv2.DestroyAllWindows();
                }
            }
        }
    }
}

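This example loads an object image and a scene image and detects SURF features in both. It then uses a FLANN (Fast Library for Approximate Nearest Neighbors) matcher to find the two nearest neighbors of each descriptor and keeps the matches that pass a 0.7 ratio test. Finally, it estimates a homography with RANSAC and draws the bounding box of the detected object in the scene image.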
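The ratio test in the example can be illustrated without OpenCV. The sketch below takes pairs of (best, second-best) match distances and keeps the index of every pair whose best distance is clearly smaller than the runner-up. The 0.7 threshold mirrors the example above; the names `RatioTestSketch` and `Filter` and the sample distances are hypothetical.

```csharp
using System;
using System.Collections.Generic;

static class RatioTestSketch
{
    // Keep index i when the best distance is below ratio * second-best distance.
    // An ambiguous match (two similar distances) is rejected.
    public static List<int> Filter(IReadOnlyList<(float Best, float Second)> pairs, float ratio = 0.7f)
    {
        var kept = new List<int>();
        for (int i = 0; i < pairs.Count; i++)
        {
            if (pairs[i].Best < ratio * pairs[i].Second)
            {
                kept.Add(i);
            }
        }
        return kept;
    }

    static void Main()
    {
        // Made-up distances: (best, second best) for three query descriptors
        var pairs = new List<(float Best, float Second)>
        {
            (0.10f, 0.50f), // distinctive: kept
            (0.40f, 0.45f), // ambiguous: rejected
            (0.20f, 0.40f), // distinctive: kept
        };
        List<int> kept = Filter(pairs);
        Console.WriteLine(string.Join(", ", kept)); // 0, 2
    }
}
```

Lowering the ratio keeps fewer but more reliable matches, which matters because the subsequent homography estimate needs at least four good correspondences.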

Image stitching

SURF can also be used for image stitching. The following is a simple example:

C#

using System;
using System.Collections.Generic;
using OpenCvSharp;
using OpenCvSharp.XFeatures2D;

class Program
{
    static void Main(string[] args)
    {
        using (var img1 = new Mat("image1.jpg", ImreadModes.Color))
        using (var img2 = new Mat("image2.jpg", ImreadModes.Color))
        using (var gray1 = img1.CvtColor(ColorConversionCodes.BGR2GRAY))
        using (var gray2 = img2.CvtColor(ColorConversionCodes.BGR2GRAY))
        using (var surf = SURF.Create(hessianThreshold: 100))
        using (var descriptors1 = new Mat())
        using (var descriptors2 = new Mat())
        using (var matcher = new BFMatcher())
        {
            // Detect keypoints and compute descriptors on the grayscale images
            surf.DetectAndCompute(gray1, null, out KeyPoint[] keypoints1, descriptors1);
            surf.DetectAndCompute(gray2, null, out KeyPoint[] keypoints2, descriptors2);

            // Keep only the matches that pass the ratio test
            var matches = matcher.KnnMatch(descriptors1, descriptors2, 2);
            var goodMatches = new List<DMatch>();
            foreach (var m in matches)
            {
                if (m.Length >= 2 && m[0].Distance < 0.7 * m[1].Distance)
                {
                    goodMatches.Add(m[0]);
                }
            }

            // A homography needs at least four point correspondences
            if (goodMatches.Count < 4)
            {
                Console.WriteLine("Not enough good matches to stitch the images");
                return;
            }

            var srcPoints = new Point2f[goodMatches.Count];
            var dstPoints = new Point2f[goodMatches.Count];
            for (int i = 0; i < goodMatches.Count; i++)
            {
                srcPoints[i] = keypoints1[goodMatches[i].QueryIdx].Pt;
                dstPoints[i] = keypoints2[goodMatches[i].TrainIdx].Pt;
            }

            using (var homography = Cv2.FindHomography(InputArray.Create(srcPoints), InputArray.Create(dstPoints), HomographyMethods.Ransac))
            using (var result = new Mat())
            {
                if (homography.Empty())
                {
                    Console.WriteLine("Could not estimate a homography");
                    return;
                }

                // Warp img1 into img2's coordinate frame on a canvas large enough for both
                Cv2.WarpPerspective(img1, result, homography, new Size(img1.Cols + img2.Cols, Math.Max(img1.Rows, img2.Rows)));

                // Paste img2 at the origin of the canvas
                using (var roi = result[new Rect(0, 0, img2.Cols, img2.Rows)])
                {
                    img2.CopyTo(roi);
                }

                result.SaveImage("stitched_image.jpg");
                Console.WriteLine("Image stitching finished");
            }
        }
    }
}

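This example loads two images and uses SURF to detect feature points and compute descriptors. It then uses the matched points to estimate the homography between the two images and applies that matrix to warp the first image into the coordinate frame of the second. Finally, it combines the two images into a single stitched result.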
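To make the warping step more concrete, the sketch below applies a 3x3 homography to the corners of an image by hand and computes the bounding box of the warped result, which is one way to reason about how large the output canvas needs to be. It is a plain C# illustration under stated assumptions, not part of the example above: the names `HomographySketch`, `Project`, and `WarpedBounds` are hypothetical, and the matrix is a made-up pure translation.

```csharp
using System;

static class HomographySketch
{
    // Apply a 3x3 homography to the point (x, y), including the perspective divide.
    public static (double X, double Y) Project(double[,] h, double x, double y)
    {
        double w = h[2, 0] * x + h[2, 1] * y + h[2, 2];
        double px = (h[0, 0] * x + h[0, 1] * y + h[0, 2]) / w;
        double py = (h[1, 0] * x + h[1, 1] * y + h[1, 2]) / w;
        return (px, py);
    }

    // Bounding box of the four image corners after warping with h.
    public static (double MinX, double MinY, double MaxX, double MaxY) WarpedBounds(double[,] h, int width, int height)
    {
        var corners = new (double X, double Y)[]
        {
            (0, 0), (width, 0), (width, height), (0, height),
        };
        double minX = double.MaxValue, minY = double.MaxValue;
        double maxX = double.MinValue, maxY = double.MinValue;
        foreach (var corner in corners)
        {
            var p = Project(h, corner.X, corner.Y);
            minX = Math.Min(minX, p.X);
            minY = Math.Min(minY, p.Y);
            maxX = Math.Max(maxX, p.X);
            maxY = Math.Max(maxY, p.Y);
        }
        return (minX, minY, maxX, maxY);
    }

    static void Main()
    {
        // Hypothetical homography: a pure translation by (100, 20)
        var h = new double[,] { { 1, 0, 100 }, { 0, 1, 20 }, { 0, 0, 1 } };
        var bounds = WarpedBounds(h, 640, 480);
        // Corners move to x in [100, 740] and y in [20, 500]
        Console.WriteLine($"{bounds.MinX} {bounds.MinY} {bounds.MaxX} {bounds.MaxY}");
    }
}
```

If the bounding box extends to negative coordinates, part of the warped image would fall outside a canvas anchored at the origin, which is a known limitation of simple stitching approaches.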

SURF vs SIFT

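Although SURF and SIFT are both powerful feature detection and description algorithms, they differ in several key ways:

- Speed: SURF is typically reported to be roughly three times faster than SIFT, although the exact figure depends on the implementation and the image.

- Feature descriptor: SIFT uses a 128-dimensional descriptor, while SURF usually uses a 64-dimensional descriptor (128 dimensions are also available).

- Scale-space representation: SIFT uses the difference of Gaussians (DoG), while SURF approximates Gaussian second-order derivatives with box filters.

- Keypoint localization: SURF uses an approximation of the determinant of the Hessian matrix, while SIFT uses DoG extrema.

- Rotation invariance: SURF lets you choose whether to compute rotation-invariant features, while SIFT always computes them.

- Patents: both algorithms were patented. The SIFT patent expired in 2020 and SIFT has been part of the main OpenCV modules since version 4.4.0, while SURF is still shipped in the non-free part of opencv_contrib, so verify its patent and licensing status before commercial use.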
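For reference, the keypoint localization mentioned above can be written down compactly. In the original SURF paper by Bay et al., the detector is based on the Hessian matrix at point $\mathbf{x}$ and scale $\sigma$, where $L_{xx}$, $L_{xy}$, $L_{yy}$ are Gaussian second-order derivative responses. SURF replaces them with box-filter responses $D_{xx}$, $D_{xy}$, $D_{yy}$; the weight 0.9 is the value reported in that paper, and individual implementations may differ in detail.

```latex
\mathcal{H}(\mathbf{x}, \sigma) =
\begin{pmatrix}
L_{xx}(\mathbf{x}, \sigma) & L_{xy}(\mathbf{x}, \sigma) \\
L_{xy}(\mathbf{x}, \sigma) & L_{yy}(\mathbf{x}, \sigma)
\end{pmatrix},
\qquad
\det(\mathcal{H}_{\text{approx}}) = D_{xx} D_{yy} - (0.9\, D_{xy})^2
```

Keypoints are the local maxima of this determinant across position and scale, and the Hessian threshold passed to the detector discards maxima whose response is too weak.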

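Optimizations and variants of SURF

- U-SURF: a variant of SURF that does not compute the dominant orientation of each feature point. It is therefore not rotation invariant, but it is faster.

- SURF-128: a variant of SURF that uses a 128-dimensional descriptor instead of the standard 64 dimensions. It is more distinctive but more expensive to compute.

- G-SURF: Gauge-SURF is a variant of SURF that uses gauge derivatives to improve robustness to image deformations.

- CUDA SURF: a GPU-accelerated version of SURF that can significantly increase processing speed, especially for large images or real-time applications.

- ORB: although not a direct variant of SURF, ORB (Oriented FAST and Rotated BRIEF) can be regarded as an alternative to SURF and SIFT. It combines the FAST keypoint detector with the BRIEF descriptor, is faster, and is free to use.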

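Limitations of SURF

Although SURF is a powerful algorithm, it has some limitations:

- Patent issues: SURF has been covered by patents and is still classified as non-free in OpenCV, so using it may carry legal risk in some situations.

- Non-linear transformations: SURF's performance may degrade under severe non-linear image transformations, such as strong perspective changes or bending.

- Computational cost: although SURF is faster than SIFT, it may still be too slow for some real-time applications.

- Memory usage: SURF needs to store the integral image, which can increase memory usage.

- Parameter sensitivity: SURF's performance is quite sensitive to its parameters (such as the Hessian threshold), which may need tuning for a specific application.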

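Conclusion

SURF is a powerful and efficient feature detection and description algorithm with a wide range of applications in computer vision. It is significantly faster than SIFT while maintaining good performance. In OpenCvSharp, SURF can be used conveniently for tasks such as feature detection, image matching, object recognition, and image stitching.

SURF is not a universal solution, however. When deciding whether to use it, consider the specific application scenario, the performance requirements, and possible legal issues. For some applications, a SURF variant or an alternative algorithm such as ORB may be a better fit.

Overall, SURF is a strong tool for computer vision tasks, and with the support of a high-level library such as OpenCvSharp it is easy to implement complex computer vision features in C# projects.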

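Author: 技术老小子

Article link:

Copyright notice: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting.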